July 2020 HYDROCARBON ENGINEERING 21

traditional techniques, such as linear regression and various

‘rules of thumb’. Refiners tend to clean the most fouled

bundles rather than the bundle with the greatest economic

value. CPM uses Petro-SIM and the most advanced prediction

techniques to project future fouling factors. It uses rigorous

heat and material balances to calculate the right bundle to

clean based on economic outcomes. The CPM predictions

and simulations empower the refinery’s asset management

teams to determine the right bundles to clean at the right

time (Figure 1).
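The difference between cleaning the most fouled bundle and cleaning the most valuable one can be shown with a minimal sketch. It is not CPM's method: the `Bundle` fields, exchanger tags, dollar figures, and the 90-day horizon are all hypothetical, and the daily saving is assumed to come from a rigorous heat and material balance run elsewhere.

```python
from dataclasses import dataclass


@dataclass
class Bundle:
    name: str                     # hypothetical exchanger tag
    fouling_factor: float         # m2.K/W, illustrative value
    energy_saving_per_day: float  # USD/day recovered if cleaned (assumed simulation output)
    cleaning_cost: float          # USD, assumed one-off cost of cleaning


def net_benefit(bundle, horizon_days):
    """Energy value recovered over the horizon minus the cost of cleaning."""
    return bundle.energy_saving_per_day * horizon_days - bundle.cleaning_cost


def rank_by_value(bundles, horizon_days=90):
    """Order bundles from highest to lowest net economic benefit."""
    return sorted(bundles, key=lambda b: net_benefit(b, horizon_days), reverse=True)


bundles = [
    Bundle("E-101", 0.0009, 400.0, 30000.0),
    Bundle("E-102", 0.0005, 900.0, 25000.0),
    Bundle("E-103", 0.0007, 150.0, 20000.0),
]

most_fouled = max(bundles, key=lambda b: b.fouling_factor)
best_value = rank_by_value(bundles)[0]
print(most_fouled.name, best_value.name)
```

With these made-up numbers the most fouled bundle and the most valuable bundle are different exchangers, which is the situation the text describes.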

CPM provides refinery operators with the capability to

predict future fouling in preheat trains several months in

advance. This allows them to reduce energy consumption

and avoid throughput-limiting conditions before they occur.

In addition, the solution provides insight on how to

optimise heat exchanger cleaning schedules to maximise

the return on investment.
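As an illustration of projecting fouling ahead of time, the sketch below uses a simple asymptotic fouling curve as a stand-in. This is an assumption made for the example only; the article does not say which prediction techniques CPM applies, and the parameter values (`rf_inf`, `tau_days`, `rf_limit`) are hypothetical.

```python
import math


def fouling_resistance(t_days, rf_inf, tau_days):
    """Asymptotic fouling curve: resistance approaches rf_inf with time constant tau_days."""
    return rf_inf * (1.0 - math.exp(-t_days / tau_days))


def days_until_limit(rf_limit, rf_inf, tau_days):
    """Days after cleaning until the projected resistance reaches rf_limit.

    Returns None when the curve never reaches the limit.
    """
    if rf_limit >= rf_inf:
        return None
    return -tau_days * math.log(1.0 - rf_limit / rf_inf)


# Hypothetical exchanger: asymptote 0.001 m2.K/W, time constant 200 days,
# throughput assumed to become constrained at 0.0005 m2.K/W.
days = days_until_limit(rf_limit=0.0005, rf_inf=0.001, tau_days=200.0)
print(round(days, 1))
```

A projection of this kind gives the lead time available to schedule cleaning before the limit is reached.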

Digital

Until recently, operators used process simulation models for

activities such as basic design, unit optimisation, crude

selection, and operator training. The simulations existed in

isolation from the plant data models that the operator hosted in

information management systems. Users operating off the

plant’s information management systems have historically

been unable to directly access the value of the site’s process

simulation tools, and vice versa.

However, enhanced connectivity has rectified this by

permitting increased contextualisation of data and

multi-purpose use of single models. With added context and

relationships, more plant process data measurements can

constitute information. Ever-increasing context,

connectedness, and patterns can generate more insight for a

greater number of consumers.

With this IT foundation and by expanding upon data

management capabilities, process simulation tools have now

become a source for sustained value delivery. They provide the

answer to operating companies seeking digitalisation solutions.

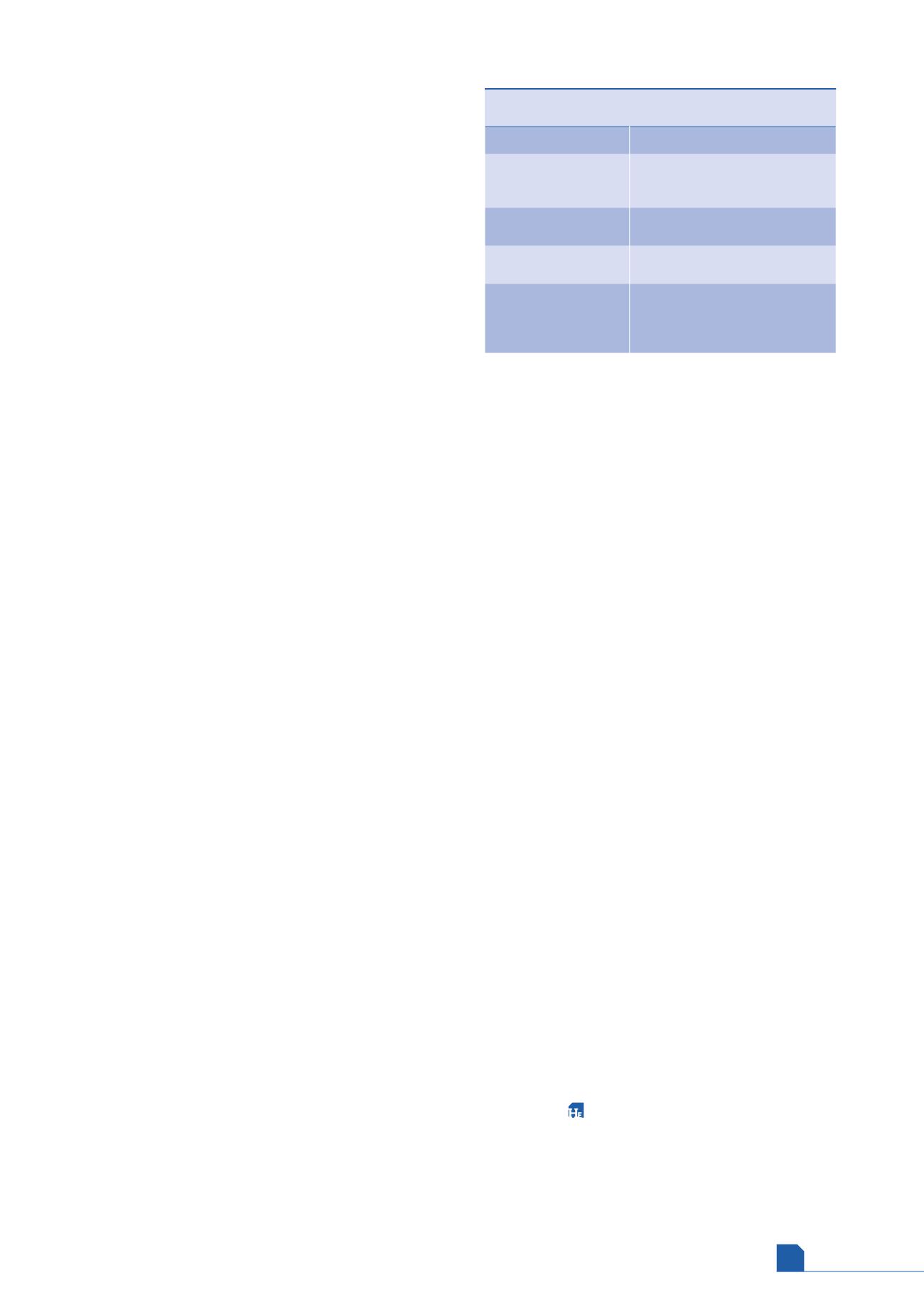

Next generation process simulation tools can be

operationalised into digital twins with real-time production

data. By using this data from sites’ distributed control systems,

historian, and laboratory information systems, the digital twin

enables quick and informed decisions. The digital twin delivers

real-time, high-fidelity, virtual representations of hydrocarbon

molecule transformation and associated plant operating

conditions. All outputs of the digital twin are written into fully

historized relational databases for data mining. Furthermore,

the digital twin automatically validates mass balances and

reconciles process data, closing the value gap between

the production plan and actual performance. Using the simulator in this way

enables real-time asset performance monitoring, surveillance,

supply chain optimisation, and other advanced applications

and services (Table 1).
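Mass balance validation and data reconciliation can be sketched for the simplest case: one linear balance around a unit, solved by weighted least squares. This is a textbook formulation used here as an assumption, not a description of how the digital twin implements it; the flows and variances are hypothetical.

```python
def reconcile(measured, variances, coeffs):
    """Adjust measurements so that sum(c_i * x_i) = 0 holds exactly.

    Minimises the variance-weighted squared adjustments subject to one
    linear balance; less trusted measurements (larger variance) move more.
    """
    residual = sum(c * y for c, y in zip(coeffs, measured))
    denom = sum(c * c * v for c, v in zip(coeffs, variances))
    return [y - v * c * residual / denom
            for y, v, c in zip(measured, variances, coeffs)]


# Hypothetical splitter: feed = product A + product B (t/h).
measured = [100.0, 61.0, 42.0]   # raw readings do not balance (100 vs 103)
variances = [4.0, 1.0, 1.0]      # the feed meter is assumed least accurate
coeffs = [1.0, -1.0, -1.0]       # feed - A - B = 0

reconciled = reconcile(measured, variances, coeffs)
print(reconciled)
```

The size of the adjustment on each meter is itself useful information: a persistently large correction on one instrument suggests a measurement problem rather than a process change.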

Any change to a data point or stream triggers automatic

notification to the simulation model. All model outputs

automatically write back, in real-time, thereby enhancing the

quality and richness of historian data. This includes

comparison of measured vs simulation model vs linear

programming model outputs to help track when models and

actual plant performance diverge.
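The three-way comparison described above can be sketched as a simple tolerance check. The function names, the 5% tolerance, and the example values are hypothetical; a real deployment would read these values from the historian rather than hard-code them.

```python
def relative_gap(a, b):
    """Absolute difference scaled by the larger magnitude of the two values."""
    return abs(a - b) / max(abs(a), abs(b), 1e-12)


def divergence_flags(measured, simulated, planned, tolerance=0.05):
    """Flag each pair of sources whose values differ by more than the tolerance."""
    return {
        "measured_vs_simulation": relative_gap(measured, simulated) > tolerance,
        "measured_vs_plan": relative_gap(measured, planned) > tolerance,
        "simulation_vs_plan": relative_gap(simulated, planned) > tolerance,
    }


# Hypothetical yield of one product (wt%): plant data, simulator, LP model.
flags = divergence_flags(measured=48.0, simulated=49.0, planned=55.0)
print(flags)
```

In this made-up case the simulator tracks the plant while the linear programming model does not, which would point to the planning model as the one needing an update.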

Case study 3

Implementing a refinery digital twin is a key project that is part

of a European integrated oil company’s digital transformation

efforts. The digital twin outcomes will involve process

improvements that maximise production while optimising

energy consumption.

The refinery digital twin project integrates KBC’s

Petro-SIM process simulation software and OSIsoft PI-AF. By

leveraging existing simulation models with the latest ability

to connect with PI-AF, the operator will be able to closely

monitor the units.

As a result of continuous monitoring, the operator was able

to update the models in an agile manner. It achieved its goal of

generating more reliable production plans, allowing the

operator to identify pattern changes in the behaviour of the

main units, e.g. operational variations or catalyst cycle changes.

It is then possible to optimise the main process unit’s

performance by alerting when there are deviations between

actual data, unit simulator data, and planning data.

Entering a digital collaboration is difficult yet exciting. It

requires a company to recognise that it is lacking in certain

skills that partners can bring to the table. There must be value

to both sides. The obvious question then, if there is value, is

why not build that knowledge and skill in house?

There are times when acquisition is the right answer, while

other times a partnership is preferable. Ultimately, it is a

business decision about resources, priorities, and cost. It is

important to recognise that a company does not just buy

technology, but also the culture. Picking business partners

based on their capabilities and shared digitalisation values and

philosophy is the best method for success.

To further enhance collaboration success, the digital

process simulator should be built ‘open’ and with connectable

tools. This creates a modular digital twin that gives those who

want to progress further access to a full technology stack, with each component

built by experts who are passionate about their area of study.

User experience is also important. Understanding how users

want to interact with the technology stack plays a

role in development. Being adaptable to all the stakeholders is

key to success.

Reference

1. ‘Achieving fullstream digitalization with continuous process management’, Baker Hughes whitepaper, (2018), https://www.bhge.com/document/white-paper-accenture-and-kbc-achieving-fullstream-digitalization-continuous-process-management

Table 1. Always aligned with and driving the business data model

| Traditional simulator | Digital twin |
| --- | --- |
| An accurate representation of a specific operating case | An accurate representation of the asset over its full range of operation, all of the time |
| Static provision of a snapshot in time | Captures the full history and future of the asset |
| Built on an ad-hoc basis to answer a question | Automated, regular model runs. Built-in to business workflows |
| Owned and used by isolated groups on an ad-hoc basis | Centralised single version of the truth, everyone uses, delivers outputs directly to the business, strong governance systems |